I had a grand idea, as I usually do, and decided to execute it swiftly. My wallet feels pain when my mind gets on its singular track, but there isn’t anything that can be done to steer it off.

2020 is quite possibly the year of the Linux gaming desktop. Let’s explore.

Why would you do this to yourself

Why? You had an eGPU setup that was working fine, right?

Not exactly. Sure, the power issues were resolved with the 100W Wacom charger. OpenRazer ended up supporting the model I got. Someone even wrote a driver for changing performance modes, previously thought to be Windows-only via Synapse, an amazing feat I commended them for after I tried to write directly to the EC to no avail.

After about half a year on the setup, the laptop would exhibit odd behavior. The builtin screen developed a sort of “burn-in” that wasn’t actual burn-in. When switching discrete GPUs, or sometimes just on power-up, the previous image would stay on the screen and flicker. Here are some videos and photos which might help explain.

There’s no way that’s a software issue. You can’t convince me. It’s hard to see, but that’s text from the previous boot flashing.

I sent it off to Razer, where they plugged it into their “comprehensive” diagnostic suite that “checks every part of the system”, which apparently just amounts to an underpaid worker plugging a flash drive in that has some bullshit driver updater on it. I got a sheet of paper that said “PASS” on everything - despite me writing a nice handwritten note that told them exactly how to reproduce the error (though it could be a little finicky sometimes), and what I thought my diagnosis was. Just fucking replace the mainboard, please, dear god. Nope.

Okay but that doesn’t explain why you’re building a desktop and not buying a new laptop

Lay off me! Gaming laptops are fucked. Too much heat to dissipate. I refuse to buy another Razer laptop - my roommate also had graphical issues that could only be attributed to hardware after trying a Blade Stealth with an eGPU.

If I buy another laptop, it will be with one fucking GPU. If I try this again, the main GPU in there better be good enough that I don’t have to rely on both Nvidia and Razer not fucking up. Give me mobile Ryzen and Vega. Give me USB 4, so we can have external GPUs without buying Intel laptops only. We’re still a ways out, though.

The only way to pick your components and ensure a good graphics experience on Linux in this day and age is with a desktop or with an iGPU. Unless you’re dedicated enough to dremel out your ThinkPad chassis, in which case, bravo.

And even then it’s a bit rough. Here’s the build I went with, initially. It ticks all the boxes, and most off-the-shelf hardware is compatible with Linux. Small form factor, a Ryzen CPU, and quiet.

Part 1 - Stability vs Power

I love the concept of open graphics drivers. I think it’s wonderful and amazing, but driver developers will never be paid well enough for all the bullshit they endure, and I worry for their sanity. Valve’s efforts in developing an alternative backend shader compiler called ACO promised a release from the stutter that I’d been enduring. Vulkan was the hot new future, fuck OpenGL, and who needs Nvidia’s binary blob driver performance when you have a nice new API to build out in open source code?

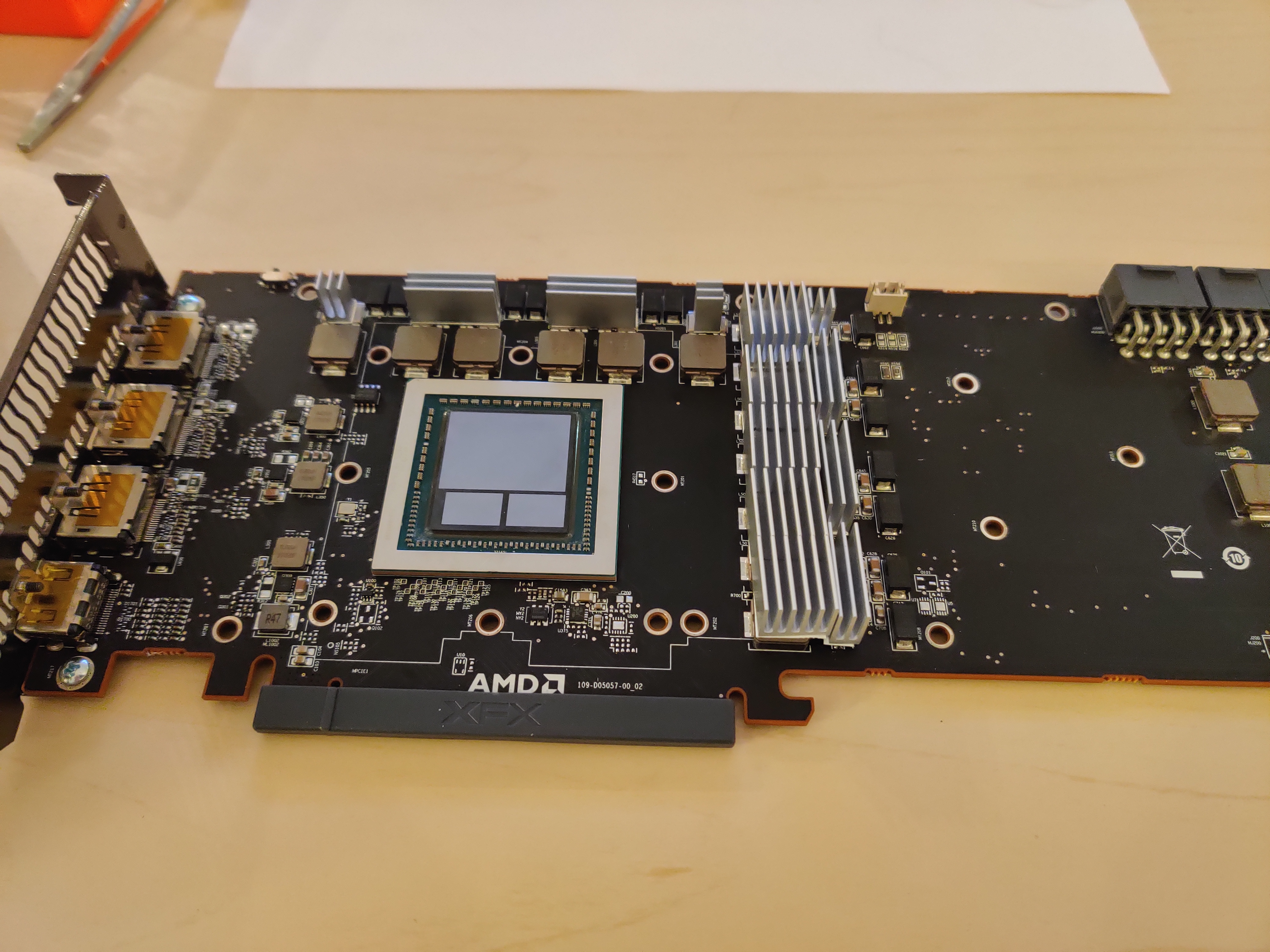

AMD recently released their 5700 series “Navi” architecture cards, to much fanfare. The idea of the 5700XT appealed greatly to me. It was the mostly-high-end-but-not-quite-high-end-enough-to-compete-with-the-2080ti card, and that was good enough for me.

Good lord. I started to dive into what it would take to get one up and running. I hadn’t considered buying a graphics card in years since I got my MSI Seahawk 1080 (which is still a tremendously performing card on all fronts, though fuck Nvidia).

tl;dr:

- The reference card is trash unless you want a loud blower cooler, so buy a third party one. Alright, no big deal.

- The third party cards that are best at cooling are the Powercolor Red Devil or the Sapphire Nitro+, so spend $440.

- Update mesa and the kernel because Navi drivers aren’t stable enough yet.

- Have your desktop lock up.

- Be unable to adjust clocks and voltages.

- Have your cursor stutter randomly at high refresh rates.

- Buy a Vega 64 off craigslist instead.

This was not a great experience. I had thought that going AMD would give me the best Linux experience available, but I’ve come to understand in recent months that Nvidia more frequently just worked for me. Though, there are a few cases where AMD cards come out ahead, which we’ll discuss later.

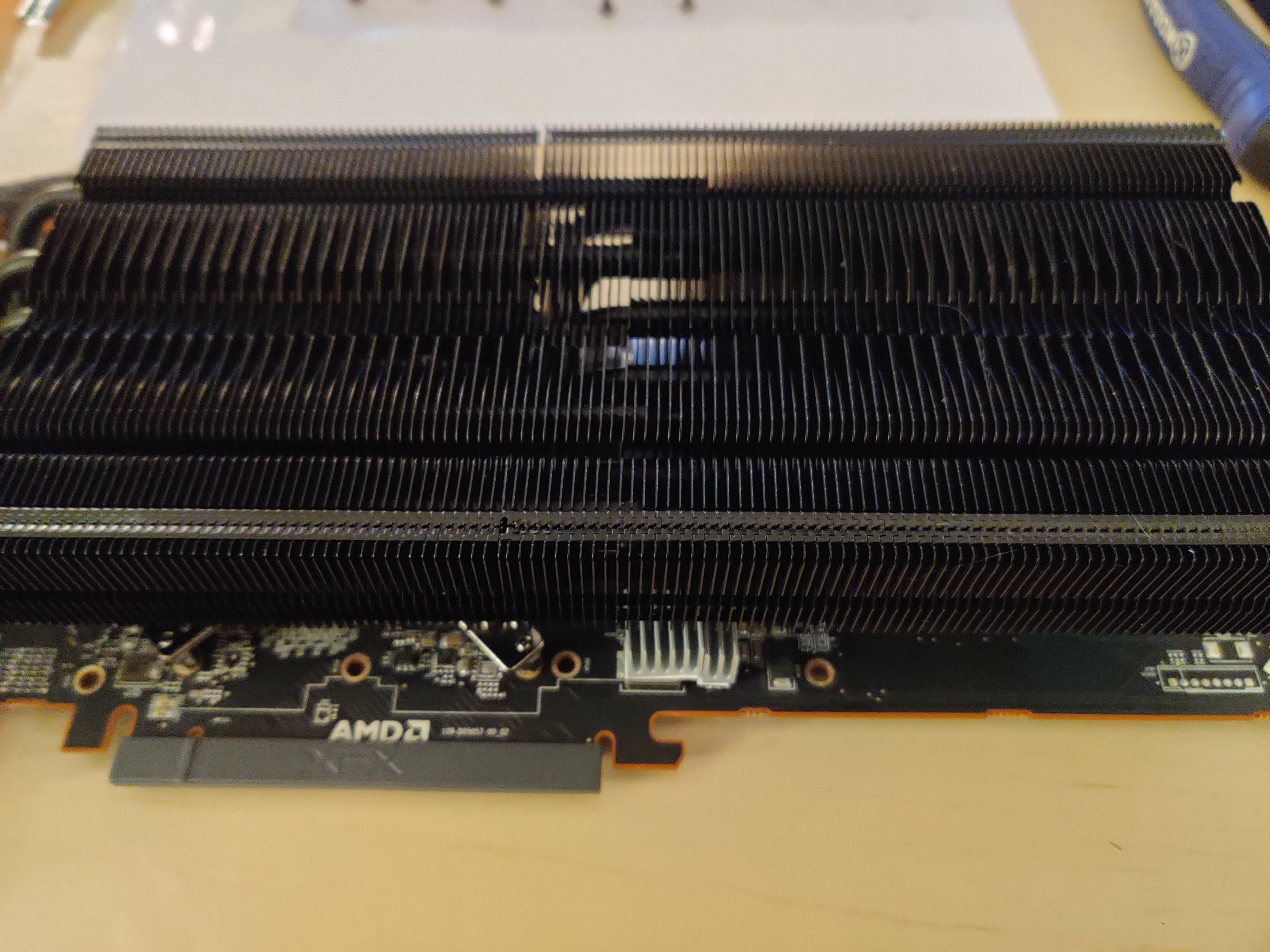

Because the Vega 64 reference cooler was trash, too, I ended up replacing it with the Morpheus Vega and some Noctuas. It stuck out of my case, but whatever. I just wanted a quiet, stable card.

You know what else wasn’t a good experience? Writing my own Xorg config. But I have done it before, so whatever.

/etc/X11/xorg.conf.d/20-amdgpu.conf

    Section "Device"
        Identifier "AMD"
        Driver "amdgpu"
        Option "TearFree" "true"
    EndSection

    Section "Monitor"
        Identifier "DisplayPort-1"
        Modeline "3440x1440_100" 531.52 3440 3448 3480 3520 1440 1496 1504 1510 +hsync -vsync
        Option "PreferredMode" "3440x1440_100"
    EndSection

    Section "Monitor"
        Identifier "HDMI-A-0"
        Modeline "1920x1080_60" 148.50 1920 2008 2052 2200 1080 1084 1089 1125 +hsync +vsync
        Option "PreferredMode" "1920x1080_60"
        Option "Rotate" "right"
        Option "RightOf" "DisplayPort-1"
    EndSection

The modeline for 100 Hz 3440x1440 as output by X is called "3440x1440"x100.0, but I couldn’t invoke it by that name in the config with PreferredMode. So I just copied the values and renamed it to "3440x1440_100".
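If you want to try a modeline before committing it to the Xorg config, xrandr can register and apply one at runtime. Here's a rough sketch of that, not what the config above does: `try_mode` is a made-up helper name, and by default it only prints the xrandr commands instead of running them.

```shell
# try_mode is a hypothetical helper: register a modeline with xrandr,
# attach it to an output, then switch that output to it.
# With DRY_RUN=1 (the default) the commands are only printed.
try_mode() (
    output="$1"; name="$2"; shift 2
    run=""
    [ "${DRY_RUN:-1}" = "1" ] && run="echo"
    $run xrandr --newmode "$name" "$@" &&
    $run xrandr --addmode "$output" "$name" &&
    $run xrandr --output "$output" --mode "$name"
)

# Example with the output name and timings from the config above:
#   try_mode DisplayPort-1 3440x1440_100 531.52 3440 3448 3480 3520 1440 1496 1504 1510 +hsync -vsync
```

Set DRY_RUN=0 once the printed commands look right. A mode added this way doesn't survive an X restart, which is why it still ends up in the config file.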

Part 2 - Compatibility

So! Now that we’ve got the GPU out of the way, let’s discuss actually gaming on Linux.

Ideally, all games would be built for Linux as well as Windows, and in doing so, developers could target open source graphics APIs, like OpenGL or Vulkan, for cross-platform compatibility. However, the reality of things is that Microsoft has its hand in the industry, and so developers usually target DirectX. It’s where the majority market share is, so it’s where the money is.

Plus, actually supporting Linux is kind of a nightmare, since you have to either ensure the user has the runtime libraries you need to run on their system, or pack your own. Most games on Windows solve this problem by bundling the DirectX runtimes with the game, but watch a Valve developer discuss the workarounds necessary for library compatibility via isolating games on Linux in containers. It’s horrifying.

Unless a drastic mindset shift happens, maybe towards distributing games via snap/flatpak/etc on Linux (or more devs target Stadia because it uses Vulkan and then it’s easier to pivot to desktop Linux), we have to contend with this exclusivity. Luckily, because people are awesome, there are solutions.

(By the way, I’m using Arch Linux ;^) - most of the tools I’ll discuss in this post are available on the AUR and I’ll provide links to them.)

Proton

Proton has come a long way, dragging Wine along with it into the future of better game compatibility.

Wine is how we can translate Windows API calls into POSIX calls. It supports some of DirectX, but not all of it. To go a step further, Proton patches Wine and bundles DXVK, which is a way to translate DirectX 9, 10, and 11 calls into Vulkan calls. On AMD and Intel hardware, the Vulkan API itself is implemented by the open source Mesa graphics drivers.

All of this means that you should be able to have a ton of games Just Work™ on Linux in 2020. If you have Steam, you can launch games with Proton there. If you have a non-Steam game or don’t have Steam, you can use Lutris - a game launcher that uses community-sourced installer scripts to apply fixes or install dependencies (and yes, it lets you use Proton as well, or you can go a step further with custom Wine builds, more on that later).

Finally, check ProtonDB or the Lutris site before you buy a game to see how well it runs on Linux.

Compatibility Gotchas

It’s not always so easy. For example, anything that uses Windows-exclusive video libraries can have a hard time. Additionally, some games require a custom version of Wine or Proton with patches applied. There are a lot of per-game fixes that are required in these compatibility tools. If you have doubts, just look at the changelogs for DXVK releases.

Part 3 - Hardware Tweaks

If there’s one thing I hate, it’s hardware that has Windows-exclusive control software. Luckily, where something is closed-source, there’s going to be someone to reverse engineer it.

CPU Fan & Pump Control

In my build, I have a Kraken x62 as the CPU cooler. Given the lack of fan headers on the motherboard, I have it controlling all four case fans as the CPU fan. A bit of an odd configuration, but it seems to work alright. I chose the x62 because of its compatibility with my case, and I kind of liked that infinity mirror design, but a good chunk of reviews on Amazon talk about how garbage the “CAM” software is for managing it.

Well, on Linux, someone has done the hard work of reverse engineering the protocol (it just talks over an internal USB header) and created liquidctl. You can use it to send commands to the pump to adjust speeds for the fan and pump, or change the colors and animations. Incredibly easy to set up. It can be started using systemd:

/etc/systemd/system/default.target.wants/liquidcfg.service

    [Unit]
    Description=AIO startup service

    [Service]
    Type=oneshot
    ExecStartPre=/bin/sleep 10
    ExecStart=liquidctl set pump speed 90 30 100 50
    ExecStart=liquidctl set fan speed 20 30 30 50 34 75 40 80 50 100
    ExecStart=liquidctl set ring color fading 0c00f7 8800f7 --speed slowest
    ExecStart=liquidctl set logo color off

    [Install]
    WantedBy=default.target

Interestingly, systemd starts the service too fast and launches liquidctl before all of my USB and PCI devices are enumerated. I can’t for the life of me figure out how to block until they become available using just systemd, but maybe I could use udev to detect it. Oh well. For now, I added ExecStartPre=/bin/sleep 10 to the systemd service files for liquidctl, amdgpu-clocks, and amdgpu-fancontrol.
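A fixed sleep works, but polling for the device is one possible alternative. This is only a sketch under assumptions: `wait_until` and `kraken_present` are made-up helper names, and the check assumes that `liquidctl list` prints a line containing "Kraken" once the cooler has been enumerated.

```shell
# wait_until is a hypothetical helper: retry a command once per interval
# (in seconds) until it succeeds or the attempts run out.
wait_until() (
    attempts="$1"; interval="$2"; shift 2
    while [ "$attempts" -gt 0 ]; do
        "$@" && exit 0
        attempts=$((attempts - 1))
        [ "$attempts" -gt 0 ] && sleep "$interval"
    done
    exit 1
)

# Assumption: the cooler shows up in `liquidctl list` with "Kraken" in its name.
kraken_present() {
    liquidctl list 2>/dev/null | grep -qi kraken
}

# Example: give the device up to 30 seconds to appear.
#   wait_until 30 1 kraken_present
```

Saved as a script, something like this could take the place of the sleep in ExecStartPre, so the service continues as soon as the device appears instead of always waiting the full ten seconds.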

GPU Fan Control

Since I have a custom cooler with aftermarket fans, I needed a way to control fan curves.

amdgpu-fancontrol let me mess with the PWM values to blast the Noctuas at max when the GPU hits 45C since they’re super quiet.

~/.config/amdgpu-fancontrol/amdgpu-fancontrol.cfg -> /etc/amdgpu-fancontrol.cfg

    # Temperatures in degrees C * 1000
    # Default: ( 65000 80000 90000 )
    #
    TEMPS=( 35000 45000 )

    # PWM values corresponding to the defined temperatures.
    # 0 will turn the fans off.
    # 255 will let them spin at maximum speed.
    # Default: ( 0 153 255 )
    #
    PWMS=( 89 255 )

It’s also started via a systemd service.
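To get a feel for what that two-point curve does between 35C and 45C, here's a small sketch. It assumes the script clamps below the first point and above the last one and interpolates linearly in between, which is how I understand amdgpu-fancontrol to behave. `pwm_for_temp` is a made-up name, and it only handles a two-point curve like the one above.

```shell
# Same two-point curve as the config above (temperatures in millidegrees C).
TEMPS="35000 45000"
PWMS="89 255"

# pwm_for_temp is a hypothetical helper: clamp outside the curve,
# interpolate linearly with integer math inside it.
pwm_for_temp() (
    temp="$1"
    set -- $TEMPS; t_lo="$1"; t_hi="$2"
    set -- $PWMS;  p_lo="$1"; p_hi="$2"
    if [ "$temp" -le "$t_lo" ]; then
        echo "$p_lo"
    elif [ "$temp" -ge "$t_hi" ]; then
        echo "$p_hi"
    else
        echo $(( p_lo + (temp - t_lo) * (p_hi - p_lo) / (t_hi - t_lo) ))
    fi
)

# Example: pwm_for_temp 40000   (halfway up the curve, prints 172)
```

So the fans idle at roughly a third of their range (89 out of 255) and ramp to full speed over a 10 degree window.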

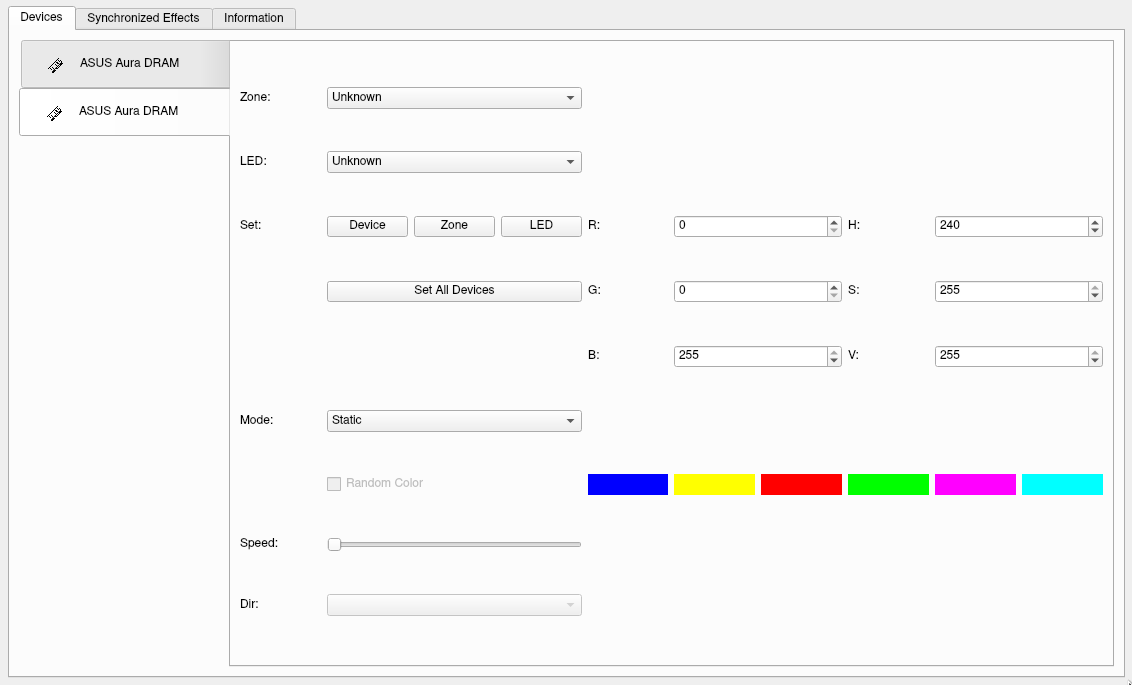

LED Control

After my Gigabyte motherboard’s USB 3 controller stopped working, and Gigabyte refused to fix the board (That’s two RMAs and zero fixes, folks), I decided to go for the only other reasonable Mini ITX AM4 board, the Asus ROG Strix X570-i.

This opened me up to the possibility of using OpenRGB to light my RAM. And it just works! Download and compile it if it supports your hardware.

Though, it’s not persistent yet and can’t be launched via CLI on startup. I’ll be adding it to my startup scripts once it can be.

Overclocking / Undervolting

- GitHub: https://github.com/sibradzic/amdgpu-clocks

- GitHub: https://github.com/BoukeHaarsma23/WattmanGTK

(Once again, the Arch Wiki has a great resource…)

I wanted to try and push my Vega 64 a bit further, so I dove into overclocking a bit.

On Linux, you’ll probably want to use WattmanGTK to experiment with clock values and states. Oh, and don’t forget to pass amdgpu.ppfeaturemask=0xfffd7fff as a kernel parameter in Grub or whatever bootloader you use.
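A quick way to sanity-check the mask is to look at the overdrive bit. The sketch below assumes that bit 14 (0x4000) of ppfeaturemask is the one that unlocks overclocking (PP_OVERDRIVE_MASK in the kernel's amdgpu headers). The helper names are made up for illustration.

```shell
# Assumption: bit 14 (0x4000) of ppfeaturemask is the overdrive bit.
overdrive_enabled() {
    [ $(( $1 & 0x4000 )) -ne 0 ]
}

# Reads the mask the running kernel is using.
# The path only exists while the amdgpu module is loaded.
current_mask() {
    cat /sys/module/amdgpu/parameters/ppfeaturemask
}

# Example, after rebooting with the new kernel parameter:
#   overdrive_enabled "$(current_mask)" && echo "overdrive unlocked"
#
# With GRUB the parameter usually goes into /etc/default/grub, e.g.
#   GRUB_CMDLINE_LINUX_DEFAULT="... amdgpu.ppfeaturemask=0xfffd7fff"
# followed by: grub-mkconfig -o /boot/grub/grub.cfg
```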

Once I had values I liked, I used amdgpu-clocks to set the custom states.

~/.config/amdgpu-clocks/amdgpu-custom-state.card0 -> /etc/default/amdgpu-custom-state.card0

    # Slight core overclock, slight undervolt states 6,7
    # stock s6: 1536MHz, 1150mV
    # stock s7: 1636MHz, 1200mV
    # Max out power cap to 330W so the card spends more time on high states

    # Set custom GPU states:
    OD_SCLK:
    6: 1552MHz 1100mV
    7: 1675MHz 1150mV

    # Force power limit (in micro watts):
    FORCE_POWER_CAP: 330000000

I didn’t get much extra perf out of my card, unfortunately, but I was able to optimize a bit.

Part 4 - Software Tweaks

fsync

- Arch Resources: https://wiki.archlinux.org/index.php/Steam#Fsync_patch

- Performance Results: https://github.com/ValveSoftware/Proton/issues/2966

- (Also available in tkg kernels)

Announced by Valve in July 2019, fsync is a faster replacement for “esync,” an optimization in Wine that can help with FPS in CPU-heavy games.

I really suck at explaining what it is generally and non-technically (something to do with using file descriptors for thread synchronization, and how there’s a limit on the number of FDs), mostly because I don’t want to read pages of Wine documentation, so if you want the technical details, see the post I linked.

To use it, you need a kernel which includes the fsync patches, and a version of Wine/Proton that knows to use fsync.
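If you end up falling back to esync, the FD limit mentioned above is easy to check from a shell. As far as I know, the esync README asks for a hard open-file limit of at least 524288, while fsync avoids the issue by using futexes instead of file descriptors. A minimal sketch, with `fd_limit_ok` as a made-up helper name:

```shell
# Threshold taken from the esync README; treat it as an assumption elsewhere.
ESYNC_MIN_FDS=524288

# fd_limit_ok is a hypothetical helper: succeeds if the given hard limit
# on open files is high enough for esync.
fd_limit_ok() {
    [ "$1" = "unlimited" ] || [ "$1" -ge "$ESYNC_MIN_FDS" ]
}

# Example:
#   fd_limit_ok "$(ulimit -Hn)" || echo "raise the open-file limit before relying on esync"
```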

ACO

- (Available in mesa 19.3), enable with RADV_PERFTEST=aco and use the RADV driver. (When you have different Vulkan drivers installed, you’ll need to export VK_ICD_FILENAMES to point to the JSON manifest for the driver you want to use.)
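In practice that can be as small as a wrapper around whatever you're launching. This is a sketch: `with_aco` is a made-up name, and the manifest path is an assumption based on where Arch's vulkan-radeon package installs the 64-bit RADV manifest.

```shell
# Assumption: location of the 64-bit RADV manifest on Arch.
RADV_ICD="/usr/share/vulkan/icd.d/radeon_icd.x86_64.json"

# with_aco is a hypothetical wrapper: run one command with RADV selected
# and the ACO shader compiler enabled.
with_aco() {
    RADV_PERFTEST=aco VK_ICD_FILENAMES="$RADV_ICD" "$@"
}

# Example:
#   with_aco vulkaninfo
# In Steam, the equivalent launch option would be:
#   RADV_PERFTEST=aco %command%
```

VK_ICD_FILENAMES takes a colon-separated list, so 32-bit games would likely need the matching 32-bit manifest added as well.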

Provided you’ve got a stable AMD card, this is where the buy starts to pay off.

Also in July 2019, Valve announced development of ACO, an alternative shader compiler for the RADV AMD driver.

It’s difficult to distill down why ACO is important. Let’s back up. There are two main open-source implementations of the AMD Vulkan driver. AMDVLK is the “official” open source Vulkan driver developed by AMD, and RADV is the mesa-maintained driver. Aside from outperforming AMDVLK in a good chunk of cases, RADV gets to take advantage of ACO instead of LLVM.

To quote the Valve announcement:

The AMD OpenGL and Vulkan drivers currently use a shader compiler that is part of the upstream LLVM project. That project is massive, and has many different goals, with online compilation of game shaders only being one of them. That can result in development tradeoffs, where improving gaming-specific functionality is harder than it otherwise would, or where gaming-specific features would often accidentally get broken by LLVM developers working on other things. In particular, shader compilation speed is one such example: it’s not really a critical factor in most other scenarios, just a nice-to-have. But for gaming, compile time is critical, and slow shader compilation can result in near-unplayable stutter.

So, ACO is purpose-built for super fast compilation speed. This means less stutter in games due to shader compilation. Great. What’s next?

Since we’re translating all of these calls to Vulkan, we get to take advantage of one of its nice features - Layers.

Vulkan Layers

What if you want to get in-between the game and driver, to show performance data or modify the picture? On Windows, this might have been done previously via DLL hooking or a separate on-screen overlay application.

In Vulkan, inserting additional functionality between the application and the driver is built into the loader in the form of layers! The tools listed below are a serious value-add for gaming on Linux, especially considering that they’re open source.

If you’re just using mesa and dxvk (which you are if you’re using Proton and an AMD card) there are actually some built-in overlays you can activate.

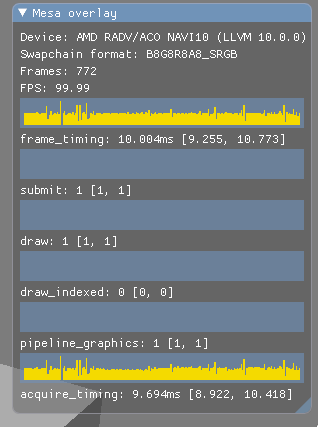

VK_LAYER_MESA_overlay

- (Available in Mesa, use VK_INSTANCE_LAYERS=VK_LAYER_MESA_overlay)

This overlay shows some info that might not be immediately useful to everyone, though it does show FPS and a frametime graph, useful for debugging anything that might be getting in the way of your frames rendering.

DXVK_HUD

- (Available in DXVK, use DXVK_HUD=full or similar)

This overlay is a bit more useful, since it can spit out which driver you’re using. It also has the added bonus of popping up some text in the bottom left of the screen whenever shaders are compiling, so you can identify if your stuttering is because of that.

MangoHud

- GitHub: https://github.com/flightlessmango/MangoHud

- AUR: https://aur.archlinux.org/packages/mangohud/

use MANGOHUD=1

MangoHud is, to me, the most exciting of the three. Not only can it show frametimes with a graph, and FPS, it can also show GPU & CPU temp. It can also limit FPS (which can help with stuttering, if your GPU is producing frames faster than your refresh rate), and force VSync.

As an aside, I did some testing with Risk of Rain 2 via Proton with MangoHud. The game stutters on my setup with vsync turned off and no frame limiting imposed. Even when forcing vsync in the game, or forcing it on via MangoHud, it would still stutter. For some reason, the “Mailbox” vsync mode in MangoHud provided the best performance.

Finally, it can also dump performance logs, which can be uploaded to a site for comparison between different setups. I really recommend checking out flightlessmango.com - it gives a great sampling of what performance and visuals are like when you compare across different drivers & platforms.

Post Processing with vkBasalt

use ENABLE_VKBASALT=1

vkBasalt is another, very exciting tool in the Vulkan gaming toolbox. It provides some post processing effects like FXAA and Contrast Adaptive Sharpening.

Additionally, it supports the ability to use Reshade Fx shaders, a generic, open-source shader language & transcompiler. This could allow for Linux users to take advantage of some of the same great shader mods that Windows users have had for a while.
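Since all of these are toggled through environment variables, they stack. Here's a sketch of a wrapper that turns on MangoHud and vkBasalt together and optionally narrows the DXVK HUD. `with_overlays` and `HUD_ITEMS` are made-up names, and the example HUD items (fps, frametimes, compiler) are ones I believe DXVK accepts.

```shell
# with_overlays is a hypothetical wrapper: run one command with MangoHud
# and vkBasalt enabled. The subshell keeps the exports from leaking out.
with_overlays() (
    export MANGOHUD=1
    export ENABLE_VKBASALT=1
    # Optional: a narrower DXVK HUD than "full", taken from HUD_ITEMS.
    [ -n "${HUD_ITEMS:-}" ] && export DXVK_HUD="$HUD_ITEMS"
    "$@"
)

# Example:
#   HUD_ITEMS=fps,frametimes,compiler with_overlays ./some-game
# In Steam, the equivalent launch option would be:
#   MANGOHUD=1 ENABLE_VKBASALT=1 %command%
```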

Take a look at a comparison video, since my explanation won’t do it justice:

Part 4.5 - Forks & Misc

Now let’s take a look at what the community has done to improve performance and fix bugs.

GloriousEggroll’s Proton

Remember when I was talking about game-specific tweaks and hacks? If your game is particularly finicky, like Warframe or FFXIV, you might even need to use a fork of Proton. Luckily, it’s as easy as dropping a folder into ~/.steam/steam/compatibilitytools.d.
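"Dropping a folder" usually means unpacking a release archive. A minimal sketch, assuming the fork ships as a tarball with a single top-level folder. `install_proton_build` is a made-up helper name and the archive name in the example is a placeholder.

```shell
# install_proton_build is a hypothetical helper: unpack a Proton build into
# Steam's compatibilitytools.d, or into another directory given as $2.
install_proton_build() (
    archive="$1"
    dest="${2:-$HOME/.steam/steam/compatibilitytools.d}"
    mkdir -p "$dest" && tar -xf "$archive" -C "$dest"
)

# Example (placeholder file name):
#   install_proton_build ~/Downloads/some-proton-build.tar.gz
```

Steam will likely need a restart before the new build shows up in the compatibility tool dropdown.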

tk-glitch’s PKGBUILDs

If using a prebuilt, prepatched package is too easy for you, you might try looking at Tk-Glitch’s PKGBUILDs for proton, wine, the Linux kernel, and other things. If you’re running into an odd bug, chances are there’s a patch you can apply by compiling the package yourself. These builds make that easy.

Custom scheduler

I haven’t actually definitively benchmarked this for myself (though I use linux55-tkg with PDS), and it might be more helpful in more resource-starved systems, but the tk-glitch kernels have patches that can replace the default CPU scheduler with one that tolerates gaming workloads better, and can provide better and more consistent frametimes in some cases.

Check out this benchmark on FlightlessMango comparing the default scheduler to PDS to help decide if it’s something you’re interested in. If you are, you can compile one of the custom kernel PKGBUILDs with a custom scheduler.

Bleeding-Edge Mesa

Think you’re too cool for stable graphics drivers? Install mesa-git and watch your performance possibly go up by fractions of percents while you wonder why your games keep crashing.

yay -S clang-git libclc-git libdrm-git llvm-libs-git opencl-mesa-git vulkan-mesa-layer-git vulkan-radeon-git xf86-video-amdgpu-git lib32-mesa-git lib32-vulkan-radeon-git lib32-vulkan-mesa-layer-git mesa-git

Conclusion

I’ve tested all of these on my rig, and have really enjoyed experimenting with gaming on Linux. It’s made me even consider reading up on Vulkan and getting into graphics programming. I’ll stick with it for the foreseeable future, and maybe if AMD decides to write better drivers for Big Navi, I’ll be happier.

Until then, I hope this post helps illuminate how you might be able to get the most out of gaming on Linux in 2020!

Note: I’ve been on a journey to reduce frametimes and lag as much as possible for my games, and it’s led me down a lot of paths to a rather disappointing conclusion. The overlays can show frametimes, and I can optimize all I want, but I can’t seem to squeeze the last few stutters out. Additionally, there’s no great way to debug what’s causing frametime issues for games on Linux. It’s usually some combination of CPU bottlenecking, your compositor running on top of the game, or some vsync fuckery. I just wish there was a way I could see what was causing frametime spikes - it’s my last hurdle to stutter-free gaming on Linux. There was a talk at XDC 2019 that gave me hope. (The answer might possibly just be to buy a variable refresh rate monitor…)

P.S. - VR

I’ve tried gaming in VR on Linux, since I own a Vive. SteamVR does support it, but getting it to work is like a house of cards for me. The SteamVR app itself isn’t stable, and it locks up my system half the time I try to use it. (Okay, yes, but that’s after I reverted from mesa-git)

Once everything actually launches, it’s not too bad, but frametimes feel way worse than a comparable Windows install on the same hardware. Additionally, if you thought compatibility for regular games is hard, try gaming in VR with Proton. I’d link all of the Reddit threads on /r/virtualreality_linux that I looked at trying to get things to work, but this one is pretty comprehensive.

We’ll see where this is in a year or two, but I’m not too hopeful - maybe if Half-Life: Alyx gets a ton of traction there will be a renewed focus on VR on Linux.

P.P.S - VRR

If you’ve read any of my past content, you know I have a G-Sync monitor as a holdover from owning a GTX 1080. I’ve yet to get a variable refresh rate monitor that works with my AMD card, but it could be the silver bullet that eliminates all the stuttering for me.

The big downside of variable refresh rate on Linux is that you can’t use multiple monitors as you can in Windows. This is because, as an Nvidia engineer puts it, VRR

“requires the application to be able to flip, which requires that its window be unoccluded, unredirected, and covering the entire desktop.”

If you’re using multiple monitors on Linux, chances are they form a single X screen, since you can drag windows between the monitors.

Though, as a last resort, I suppose I could configure two X screens…

P.P.P.S - Wayland

No. But we’re close.

Wayland compositors don’t support VRR yet.

Games must be run via XWayland.

But I’m hopeful.

Gamescope is an “experimental port of steamcompmgr as [a] Wayland compositor” which could have benefits for gaming within Wayland by removing unnecessary frame copies.

wine-wayland “allows playing DX9/DX11 and Vulkan games using pure wayland and Wine/DXVK.”

(Wow, that’s all, folks! Thanks for reading!)